Prologue

The code that our publishers place on their websites loads our adcode, which is responsible for opening PopAds popunders. To make it load as fast as possible, we serve it from a Content Delivery Network (CDN).

A CDN is a hosting provider that stores static content (such as our JavaScript adcode) on many servers around the globe, so each visitor downloads it from the nearest location.

Another benefit of using a CDN is its distributed architecture, which should provide high reliability and stability.

If the CDN stops serving our adcode, our system cannot open any popunders and is effectively down.

Incident

Just a few weeks ago, when we came up with the idea of fully redundant adcode using more than one Content Delivery Network, we did not expect it to be "tested in battle" so soon.

We thought it would be one of the many redundancy features we keep just in case but never really expect to need. The night between 29th and 30th May (GMT) showed that even highly distributed CDNs with hundreds of Gbps of bandwidth can stop working.

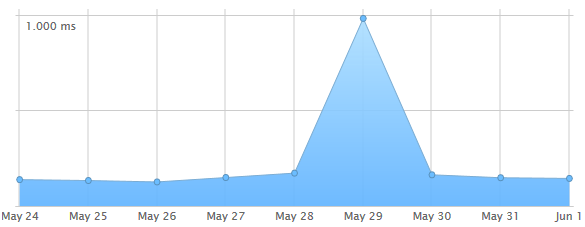

Late in the evening of 29th May 2013, our automated monitoring system reported increased latency on our primary CDN, as well as short outages every few minutes. When we contacted the CDN provider, it turned out that they were under a heavy DDoS attack and were trying to mitigate it.

In other words, our primary CDN was unstable and there was nothing we could do but wait for the provider to resolve the problem.

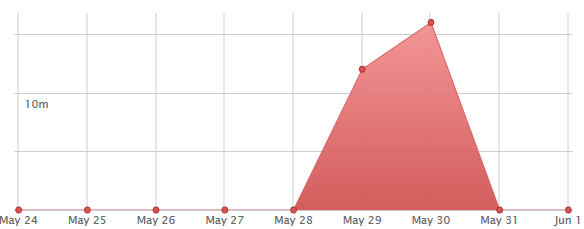

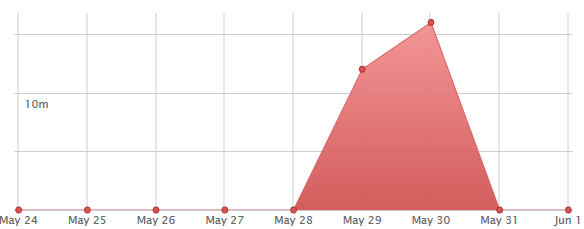

Downtime chart of our primary CDN (via Pingdom)

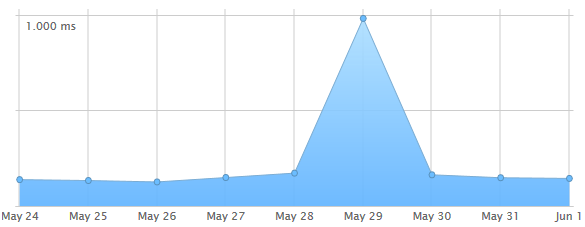

Latency chart of our primary CDN (via Pingdom)

Less than a month earlier, we had somehow managed to foresee a situation like this and created a new, redundant version of our publisher code that downloads our adcode from a secondary CDN if the primary one is unreachable.

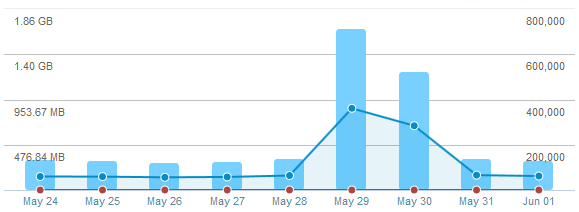

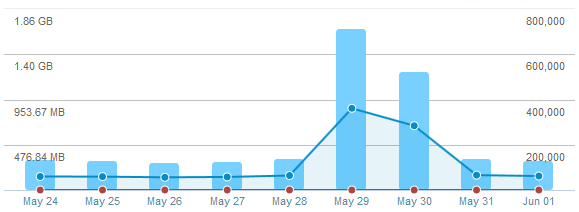

During the incident, our new publisher code automatically switched to the backup CDN, so the problems with our primary CDN did not cause any drop in the traffic handled by PopAds.

Traffic chart from our backup CDN
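For illustration, here is a minimal TypeScript sketch of this kind of fallback (not the actual PopAds publisher code, whose details are not described here): try the primary CDN first and, if the adcode fails to load or does not arrive within a timeout, load it from the backup CDN instead. The names `loadWithFallback` and `ScriptLoader`, the example URLs and the 3-second default timeout are all hypothetical. In a browser, the injected loader would typically append a `<script>` element and settle on its `load`/`error` events; here it is passed in so the switching logic can be exercised on its own.

```typescript
// Hypothetical loader: resolves when the script at `url` has loaded, rejects
// on failure. Injected so the fallback logic does not depend on the DOM.
type ScriptLoader = (url: string) => Promise<void>;

// Reject if `promise` does not settle within `ms` milliseconds.
function withTimeout<T>(promise: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms);
    promise.then(
      (value) => {
        clearTimeout(timer);
        resolve(value);
      },
      (err) => {
        clearTimeout(timer);
        reject(err);
      },
    );
  });
}

// Try each CDN URL in order and return the first one that loads in time.
// Rejects only if every CDN fails or times out.
async function loadWithFallback(
  urls: string[],
  load: ScriptLoader,
  timeoutMs = 3000, // hypothetical default
): Promise<string> {
  const errors: string[] = [];
  for (const url of urls) {
    try {
      await withTimeout(load(url), timeoutMs);
      return url;
    } catch (err) {
      errors.push(`${url}: ${err instanceof Error ? err.message : String(err)}`);
    }
  }
  throw new Error(`all CDNs failed:\n${errors.join("\n")}`);
}

// Simulated CDNs: the primary never answers (as during a DDoS), the backup does.
const fakeLoad: ScriptLoader = (url) =>
  url.includes("primary") ? new Promise<void>(() => {}) : Promise.resolve();

loadWithFallback(
  ["https://primary-cdn.example/adcode.js", "https://backup-cdn.example/adcode.js"],
  fakeLoad,
  100,
).then((url) => console.log(`adcode loaded from ${url}`));
// logs "adcode loaded from https://backup-cdn.example/adcode.js"
```

One caveat with a timeout-based switch: if the slow primary request eventually completes, a real browser loader would also need to make sure the adcode does not run twice.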

Statistics

- Our primary CDN was completely down for 28 minutes,

- For more than 24 hours, the primary CDN was much slower than usual,

- More than 500 000 adcode requests were automatically and instantly rerouted to our backup CDN.